The introduction of Co-Producer by Output exemplifies a new era for music and audio production. We are at a watershed moment: AI is everywhere, and regardless of your field of expertise, there is little doubt that it will affect your life and career in one way or another, if it hasn’t already. Music and audio production has seen huge changes driven by AI, both in intelligent audio processing and in content generation. One company at the forefront of AI development is the LA-based software developer Output. I’ve been a huge fan of the company for many years and have reviewed several of their instruments and audio effects plugins. Since the launch of their groundbreaking sampling instrument, Arcade, it has been clear that the company was looking toward the future of audio, particularly after the introduction of the AI-assisted Kit Generator in the latest version of Arcade.

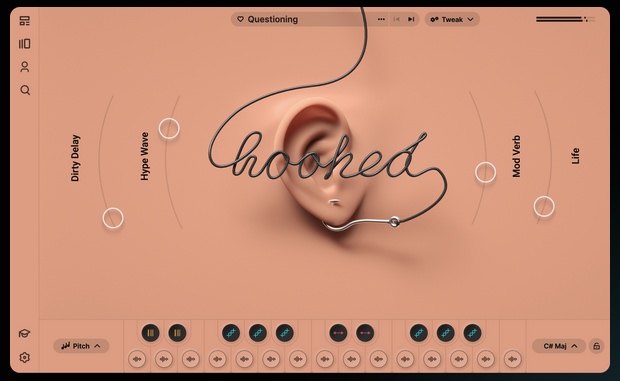

Output instruments and effects are aesthetically and sonically stunning, and their general approach is clearly to create products that inspire by engaging users both aurally and visually. But once the initial intoxication of the visual gymnastics wears off, music creators need a solid working environment that encourages ongoing discovery and experimentation. In this regard, Output has excelled.

CO-PRODUCER BY OUTPUT

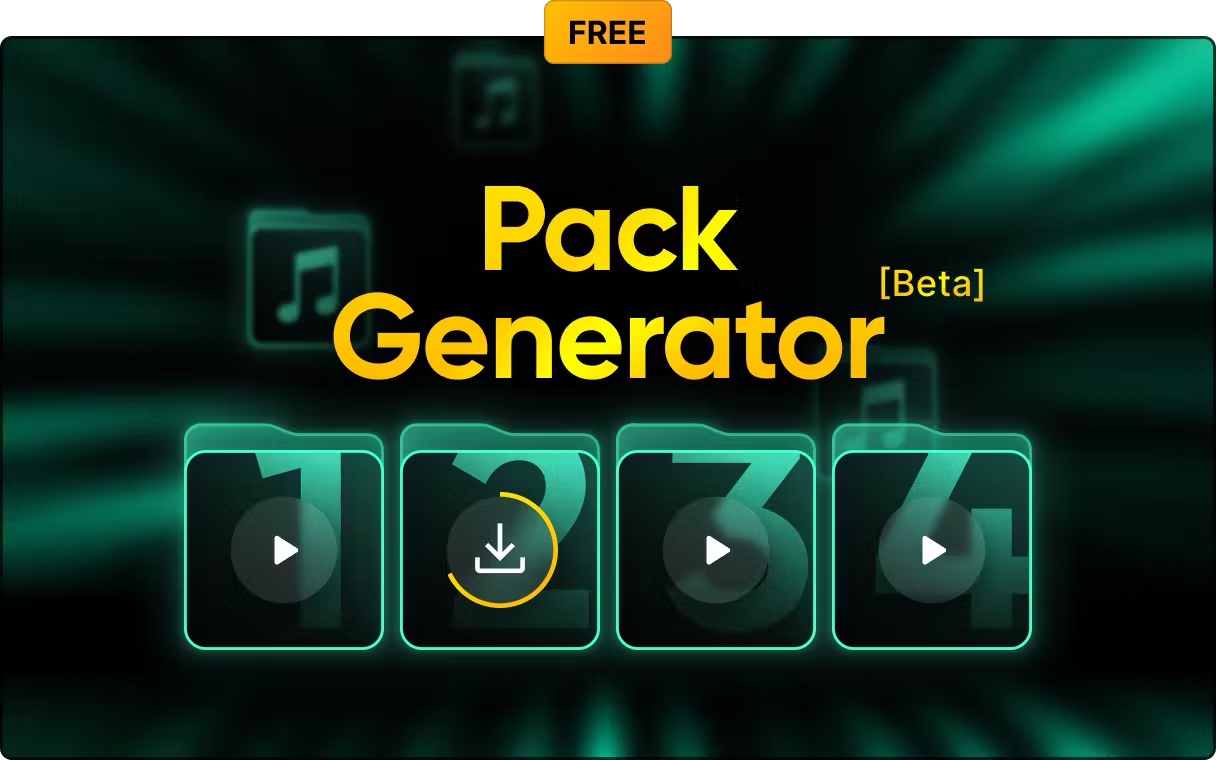

When I first heard about their new project, Output Co-Producer, I was excited to learn how this innovative developer was navigating the AI landscape of content generation. The first component of Co-Producer, Pack Generator, is currently in beta and free to experiment with.

The software generates sample packs from text prompts, much as image generators like Midjourney do. Content generation from natural language was popularized by OpenAI’s launches of DALL-E and ChatGPT. It is hard to believe that it hasn’t even been two years since ChatGPT was released, and now language-based AI models can be found literally everywhere. This reinforces the concept of exponential growth in technology, which builds on itself and accelerates toward what Ray Kurzweil termed the singularity, “the point at which machines’ intelligence and humans’ merge.” When this occurs, no one can predict what will happen. In his landmark 2005 book, The Singularity Is Near: When Humans Transcend Biology, Kurzweil predicted human-level machine intelligence by 2029 and the singularity by 2045, and he has revisited those predictions since. His new book, due to be released in June 2024, is called The Singularity Is Nearer: When We Merge with AI, so hold on to your hats!

INTERVIEW WITH SPENCER SALAZAR

Output is one company that has fully embraced the possibilities of AI for music production. So with many burning questions, I reached out to Spencer Salazar, one of the developers behind Co-Producer, to clarify and expound on Output’s approach to AI.

PHILIP MANTIONE: The name “Output Co-Producer” seems to say a lot about your approach to AI: an artist-centric approach in which AI doesn’t replace the writer but generates potential material the way a collaborator would. Could you elaborate on the big picture you envision for this sort of human/machine collaboration?

SPENCER SALAZAR: Absolutely. You can look back at the history of music technology and see developments like these acting as vital collaborators and leading to entirely new musical genres and practices. Vinyl recording, the synthesizer and drum machine, the DAW: these all made music “easier” in some ways but also opened up tons of creative possibilities. I think we’re at the very beginning of something similar with artificial intelligence. My thesis is that people like to engage deeply with a creative process; AI that gives instant creative gratification won’t be that interesting to a large audience in the long run.

PM: I played around a bit with Pack Generator, described on your website as the “first Co-Producer feature,” and was thoroughly impressed, particularly with the sample packs it generated. I tried the prompt “analog sequencer with a Minimoog vibe,” and it generated stems at 126 BPM in the key of B minor. I’m curious how that was determined. Was it random, or does the algorithm base tempo and key signature on certain words that might imply a genre?

SS: Appreciate that. It’s not random. The key and BPM of the resulting audio are selected according to specifications of the internal model, based on the genre and any other cues in the prompt. For instance, if the prompt specifies a genre with a typical BPM range, it will aim to match that; if other language in the prompt suggests a general tempo, it will gravitate toward that. “Thrilling synth-driven music” will tend toward a faster tempo, and “laid back surf rock” toward a slower one.

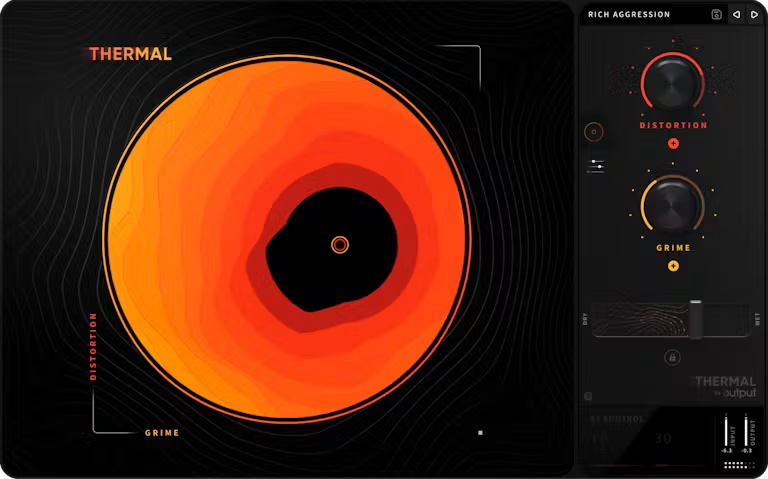

PM: While many of the stems in the sample pack sound good in isolation, I found the demo mixes to be pretty rough, overly squashed, and a bit harsh in some cases. I don’t expect any artist would simply slap stems together right out of the pack without any adjustment. But I wonder whether more work is being done to generate better starting mixes or preconfigured multitracks. Or is this first iteration focused more on individual elements?

SS: That’s a fair critique. Typically, Output products (including Co-Producer) are used for creative inspiration in the early-to-middle stages of the songwriting process, whether it’s writing hooks, arranging basslines, or adding texture and depth to an existing song. So, without discounting future enhancements like you’ve suggested, that was the obvious starting point for us.

PM: I’ve toyed with some AI visual art generators like Midjourney and Firefly. Like Co-Producer with its sample packs, those applications generate four examples to start with, but you are then free to choose one of the four and generate similar images from it. Is this something in the works at Output?

SS: We’ve thought a lot about this, and from the start, we’ve been inspired by the workflows of the tools you mentioned. In my experience, the creative process is a lot of iteration and editing. I grew up working with film photography, and the common wisdom is that you typically get one great shot out of a film roll of 36 exposures, and there are clear parallels to this with AI-based creative work. Co-Producer offers something similar, where if you keep clicking “generate 4 new samples” it will continue to explore the space of your prompt, while trying to avoid recreating any existing ideas that it already provided.

PM: Is the content generated based purely on a sample library, are sounds being generated from scratch, or is it more of a hybrid approach?

SS: Every sound you hear in Co-Producer Pack Generator is based on a real sample from Output’s library. The AI matches, combines and re-synthesizes new samples from our meticulously crafted royalty-free library to generate entirely unique sample packs.

PM: Has there been any thought given to including MIDI generation as well as audio samples? The possibilities with MIDI 2.0 seem like fertile ground for AI-assisted content generation.

SS: Absolutely. Output has an immense library of chromatic instruments, both in Arcade and going back to products like Rev, Exhale, and Substance. We’d love to connect those instruments to generated melodies, chord progressions, bass lines, etc. MIDI generation also opens up a new dimension of creative possibilities beyond loops.

PM: It seems that most generative AI software in the visual arts and music is strictly online right now, running through Discord, web browsers, or proprietary interfaces such as Co-Producer. Do you see this as a paradigm shift in which all software will someday be online and subscription-based rather than downloadable applications?

SS: One of our goals with launching Co-Producer was to get the ideas behind the product out into the world and see how people would use and respond to it, with our creator-first vision behind it. The web is a natural environment for making creative tools widely accessible, so that route made the most sense. But if you want to connect with professional users and workflows, you have to operate in the desktop world.

With audio and music, the DAW is where this is done, so it’s inevitable that tools will work in that context if they’ll be lasting and successful. At the same time, most AI tools are using extremely sophisticated models that are challenging to build into a desktop product, and so some form of cloud processing is required in the background. Unless you’re a tech giant, subscription pricing is the only way to make that work from a business perspective.

PM: The Co-Producer license grants the user “a royalty-free…non-exclusive, non-transferable, perpetual right to use the Samples in new original recordings and/or compositions such as music or video productions”. There have already been infringement cases brought by writers and visual artists against AI companies, and even production music libraries have now stopped using tags with the names of known composers due to copyright concerns. I tried the prompt “Philip Glass piano” just to see what would happen, and the result was more about “glass” sounds than the composer’s style. What sorts of prompts are currently allowed in Co-Producer, and how were they developed?

SS: It’s a thorny area. AI is having a Napster moment where the potential of this new technology must be reconciled with the damage it may do to artistic communities. Output’s roots are in the film music industry and we have strong connections to many musical communities, both professionally and personally, so we’re dedicated to doing what we feel is right by the communities who’ve gotten us this far.

One of my top professional goals throughout my career has been to give people new creative worlds to explore, rather than facsimiles of existing ideas; Output’s legacy products do exactly that, and my hope is that Co-Producer will do the same. With all this in mind, there are no limitations on the kinds of music that can be made in Co-Producer, but we also haven’t trained our algorithms on other people’s music.

PM: The software is currently free and in beta. Do you have any idea what the subscription rates will be?

SS: We’re still dialing in a lot of this as we continue to understand the value people are getting from Co-Producer. What we have seen is that people get different kinds of value out of it: some folks really enjoy it and use it for days on end, whereas others might use it a few times and move on.

PM: What can users expect in the second iteration of Co-Producer?

SS: We can’t say too much about exactly what’s coming, but know that it is indeed coming. For the next phase of Co-Producer, our team is thinking about how to leverage AI to spark creativity not only in the beginning stages but also in the middle stages of the music creation process. Stay tuned for updates from us later this year.

CONCLUSIONS

I want to offer special thanks to Spencer Salazar for his time and thoughtful responses. If you haven’t tried Co-Producer yet, I highly encourage you to do so. As mentioned, it is currently free, but nothing remains free forever. Take some time to explore what’s possible and how it might fit into your workflow. Describing sound (or imagery) with text prompts might come naturally to some people, but it is more involved than you might think and is quickly becoming an art form in itself. The better you get at prompting, the better the results.

It’s an amazing time to be alive, and whether your view is optimistic or dystopian, rest assured the future is coming, like it or not. I just wish developers would focus more on flying car technology; I’ve been looking forward to zipping around like a Jetson since the ’60s.
