Anyone who has studied psychoacoustics or the science of sound knows that the Haas effect is a fascinating byproduct of our sensory perception. The human hearing mechanism and the brain interact in ways that have evolved to benefit our survival. Psychoacoustic phenomena such as the phantom stereo image, the missing fundamental, Shepard-Risset glissandi, and frequency masking underlie many audio processing applications that rely on an understanding of the difference between measurable sonic events and what we perceive. In this article, I discuss the Haas Effect and its ramifications in audio production and sound design.

WHAT IS THE HAAS EFFECT?

The Haas Effect, also known as the Precedence Effect, is named after Helmut Haas, who described it in his Ph.D. thesis around 1949. Simply put, it states that our perceived localization of a sound is determined by what reaches our ears first. Lothar Cremer wrote about the Law of the First Wave Front in 1948, as did the psychologist Hans Wallach, and the ideas go back as far as 1851, to Joseph Henry’s piece, “On the Limit of Perceptibility of a Direct and Reflected Sound” (Gardner).

“Less frequently, the terms “law of the first wavefront,” “auditory‐suppression effect,” “first‐arrival effect,” and “threshold of extinction” have also been used in describing certain of these characteristics.” (Gardner)

So why is it so often referred to as the Haas Effect? Well, he may have been the first to perform practical experiments that would be the foundation for many audio-processing applications to come. Or maybe it just sounds cooler to say Haas Effect than the Precedence Effect or the Wallach Effect. I’ll let the scholars fight it out.

TERMINOLOGY AND RELATED CONCEPTS

When a sound is produced in a space like an auditorium, our hearing mechanism and brain work together to fuse the direct sound from the source and all the reflections into one cohesive sonic experience. Within the first 35 ms (35 thousandths of a second), our system of perception integrates these sounds and provides us with a sense of direction or location dictated by the first sound that reaches our ears. In actuality, reflections are coming from all directions, and under normal circumstances these reflections are at a lower volume and, by definition, arrive later than the direct sound.

Two terms you may have come across that relate to this idea are:

Interaural Time Difference (ITD)

This is the difference between the time a single sound reaches our left ear and the time it reaches our right. It is often discussed along with the head-related transfer function (HRTF), which describes the acoustic shadow imposed by our head on one ear or the other and relates to our perception of location and elevation. (3031)

It is amazing that “humans have just two ears, but can locate sounds in three dimensions – in range (distance), in direction above and below (elevation), in front and to the rear, as well as to either side (azimuth). This is possible because the brain, inner ear, and the external ears (pinna) work together to make inferences about location”. (Head-Related)

Measuring ITD involves extremely small units of time–microseconds (μs) or millionths of a second. “Human listeners with normal hearing are exquisitely sensitive to ITDs, with reported detection thresholds below 10 μs under optimal conditions.” (Best)

Interaural Intensity Difference (IID), or Interaural Level Difference (ILD)

This is the difference in intensity that we experience between the left and right ears based on the location of a sound source, and it is also related to the HRTF described above. The smallest discernible level difference is considered to be about 1 dB. (Learning)

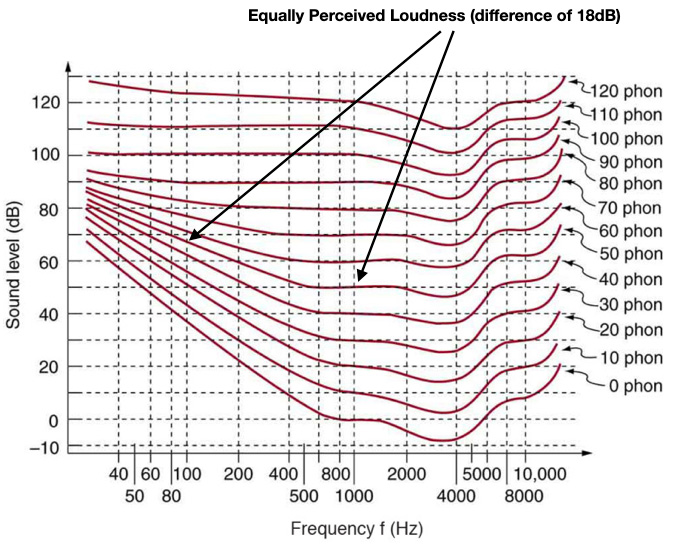

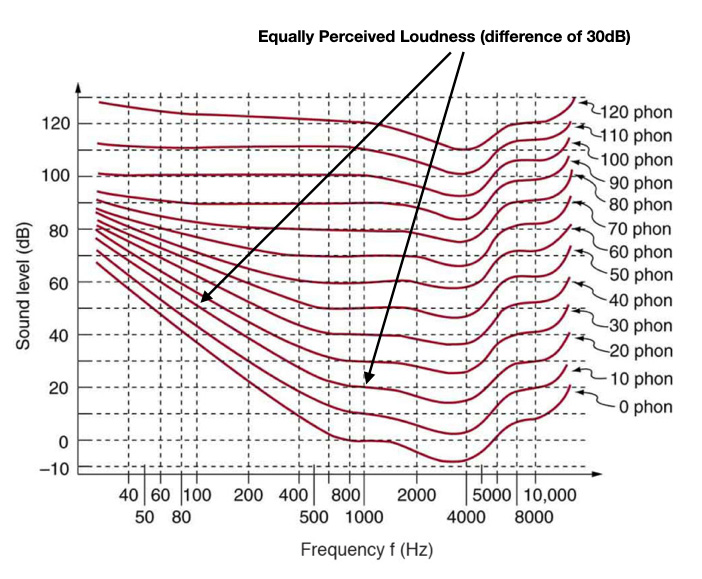

Equal Loudness Contours

The frequency range also has a huge impact on how we perceive level, as indicated by the Fletcher-Munson Equal Loudness Contours seen below. The contours show that our sensitivity changes across the frequency spectrum and is affected by the overall level.

A 1 kHz tone at 20 dB sounds as loud as a 100 Hz tone at 50 dB (a difference of 30 dB).

As the level increases, the contours get flatter, so that:

A 1 kHz tone at 50 dB sounds as loud as a 100 Hz tone at 68 dB (a difference of 18 dB).

Fletcher-Munson Equal Loudness Contours (Learning)

“Frequency has a major effect on how loud a sound seems. The ear has its maximum sensitivity to frequencies in the range of 2000 to 5000 Hz, so sounds in this range are perceived as being louder than, say, those at 500 or 10,000 Hz, even when they all have the same intensity. Sounds near the high and low-frequency extremes of the hearing range seem even less loud because the ear is even less sensitive at those frequencies.” (Learning)

THE HAAS EXPERIMENT

Haas placed his subjects 3m from a set of speakers playing at the same level on a rooftop so that an anechoic (without echo) situation could be approximated. The idea was to isolate the perception of the direct sound only, without reflections. The content was recorded speech and one speaker was delayed in relation to the other. Haas observed that when the delay was as little as 5ms, the subject perceived the sound as coming from the non-delayed speaker, a sort of panning effect. But as opposed to panning, where the level is different on the left and right, here the levels were identical.

See below for a more extreme example where a snare sound is delayed by just 10 samples, which at a 48k sampling rate equals just .2 ms. Even this minuscule delay is enough to cause a perceived directionality.
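The sample-to-time arithmetic behind that figure can be sketched in a few lines of Python. This is only an illustration; the function names `samples_to_ms` and `ms_to_samples` are my own and do not come from any DAW or plugin.

```python
def samples_to_ms(num_samples: int, sample_rate_hz: float) -> float:
    """Convert a delay expressed in samples to milliseconds."""
    return 1000.0 * num_samples / sample_rate_hz


def ms_to_samples(delay_ms: float, sample_rate_hz: float) -> int:
    """Convert a delay in milliseconds to the nearest whole number of samples."""
    return round(delay_ms * sample_rate_hz / 1000.0)


# The 10-sample snare delay at a 48 kHz sampling rate
print(round(samples_to_ms(10, 48_000), 3))  # 0.208

# A 10 ms delay at 48 kHz, expressed in samples
print(ms_to_samples(10, 48_000))  # 480
```

Going the other way is handy when a sample delay plugin only accepts values in samples.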

Within the 35 ms window described earlier, a direct sound is fused or integrated with the reflections in a room. This window is sometimes referred to as the Haas Zone. (Everest 60) The level of the delayed source had to be increased by 10 dB before it was heard as being distinct. You can think of this sonic fusion in terms of a visual analogy like the frames of a film. Although there are distinct images presented sequentially, it appears like one continuous moving image.

When the delay reaches 50 to 80 ms or so, the delayed sound is perceived as coming from a discrete location, and as the delay time increases further, discrete echoes are perceived. But if you attenuate the delayed sound, the fusion zone, or Haas Zone, is extended.

So all these elements–delay time, intensity, frequency range, interaural time difference, interaural intensity difference, and our sensory limitations combine to form our perception of sound and its location in space.

THE HAAS EFFECT IN AUDIO PRODUCTION

Live Sound Reinforcement

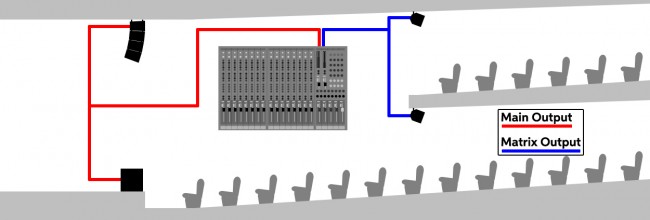

Delay lines (Stewart)

One application for the Haas effect involves enhancing the experience of live sound or sound in large spaces where directionality is important. For example, in a live concert, speakers may be positioned far from the stage to increase the level and quality of the sound for distant listeners. The signal for these remote speakers is delayed by an amount equal to the time it takes for the sound to travel from the stage to this location plus an additional 10-20 ms, and it is played at a level up to 10 dB louder than the sound that would reach that location from the stage.

The result is a louder level for distant listeners while maintaining the perception that the sound is originating from the stage. Without the delay, the perception of location would revert to the distant speakers which may result in a listener perceiving the sound as coming from behind their position. With too much delay, a distinct echo would be heard. So the idea is to keep things in the Haas Zone, a fused and integrated sonic experience. Of course, this does not address the huge difference between the speed of light and the speed of sound. So when that guitar player rolls their eyes back for that big string bend, the listener in the cheap seats will still experience a delayed sound in relation to what they see through their binoculars. But until some sort of Haas glasses are developed we are at the mercy of the laws of physics and our financial limitations.
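The delay calculation for remote speakers described above can be sketched as follows. This is a rough illustration, not a substitute for measuring on site: the speed of sound (343 m/s, roughly dry air at 20 °C), the 15 ms default offset (the midpoint of the 10-20 ms range), and the function name `delay_tower_ms` are all assumptions of mine.

```python
SPEED_OF_SOUND_M_S = 343.0  # assumption: dry air at about 20 degrees C


def delay_tower_ms(distance_m: float, haas_offset_ms: float = 15.0) -> float:
    """Delay for a remote speaker: travel time from the stage plus a Haas offset.

    distance_m     -- distance from the stage speakers to the remote speaker
    haas_offset_ms -- extra delay (10-20 ms in the text) so the stage sound arrives first
    """
    if distance_m < 0:
        raise ValueError("distance_m must be non-negative")
    travel_ms = 1000.0 * distance_m / SPEED_OF_SOUND_M_S
    return travel_ms + haas_offset_ms


# A delay tower 50 m from the stage
print(round(delay_tower_ms(50.0), 1))  # 160.8
```

In practice the speed of sound drifts with temperature, so the computed value is a starting point to be refined by ear or by measurement.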

Stereo Widening

You can use the Haas effect to create a wider stereo sound. In the examples below I’m using the stock Sample Delay in Logic Pro to delay one of two identical tracks hard-panned left/right.

Side Note: The vocal excerpt below is from Chaos Is by Sweetbreads – the multitrack is being used for the first Waveinformer mix contest–details here.

Mono vocal line

Dual Mono panned hard left and right

Right side delayed by 1ms

Right side delayed by 3ms

Right side delayed by 10ms

Right side delayed by 20ms

Right side delayed by 35ms

Right side delayed by 50ms

Right side delayed by 100ms

To help mitigate some phasing issues at smaller delay times you can add the original mono track to retain a sense of center while increasing stereo width.

Mono Vocal with Dual mono lines hard panned – right side delayed 10ms

Here’s the same situation with the addition of tilt shelving filters using the FabFilter Pro-Q 3, boosting above 1500 Hz with slightly different gain settings on the left and right.

Here’s the same situation with the addition of tilt shelving filters using the FabFilter Pro-Q 3, boosting below 1000 Hz with slightly different gain settings on the left and right.

You can use stereo wideners like the Ozone Imager or Kilohearts Haas effects to achieve similar results. And I could have used a stereo delay plugin on a single track. But there is something to be said for the kind of surgical control you can get with simple mono sample delay plugins, especially when you get creative with effects processing on the side channels.

From the examples above we can hear that the Haas Zone begins to deteriorate at delays above 35ms at which point we begin to hear distinct echoes. But in the sweet zone (around 10 – 20ms) we get a pleasant widening of the sound.
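For readers who want to try this outside a DAW, the dual-mono setup can be approximated in plain Python. This is a minimal sketch of the idea, not a description of how Logic's Sample Delay works internally; the function name `haas_widen` and its parameters are hypothetical.

```python
def haas_widen(mono, delay_ms, sample_rate_hz=48_000, delayed_side="right"):
    """Return (left, right) sample lists: one dry copy and one delayed copy.

    Both channels carry the same signal at the same level; only the
    arrival time differs, as in the hard-panned dual-mono examples.
    """
    if delayed_side not in ("left", "right"):
        raise ValueError("delayed_side must be 'left' or 'right'")
    delay = round(delay_ms * sample_rate_hz / 1000.0)  # delay in whole samples
    dry = list(mono) + [0.0] * delay       # pad the end so both channels match in length
    delayed = [0.0] * delay + list(mono)   # shift the copy later in time
    return (dry, delayed) if delayed_side == "right" else (delayed, dry)


# A two-sample test signal with the right side delayed by 10 ms at 48 kHz
left, right = haas_widen([1.0, 0.5], delay_ms=10)
print(len(left), right.index(1.0))  # 482 480
```

The two lists can then be written out as the left and right channels of a stereo file with whatever audio library you prefer.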

The effect is even more obvious with transient sounds like the snare sample below, where distinct echoes can be perceived with a delay as short as 20 ms.

Mono Snare

Dual Mono with right delayed 10 samples which at 48k sample rate equals about .2 ms

Dual Mono with right delayed 3 ms

Dual Mono with right delayed 10 ms

Dual Mono with right delayed 20 ms

Dual Mono with right delayed 35 ms

Below are some Haas experiments using a drum track.

Mono Drums

Dual Mono with right delayed 15 ms

Dual Mono with right delayed 20 ms

Dual Mono with right delayed 35 ms

Dual Mono with right delayed 15 ms + Mono Original

Dual Mono with right delayed 35 ms + Mono Original

The biggest concern when using Haas effects is phase cancelation and comb filtering caused by introducing short delays to identical signals. This can create problems for mono compatibility and underscores the importance of mixing on reference monitors rather than headphones, which may not reveal these phasing issues as well. You should always check your mixes in mono as a standard practice and use a correlation meter to monitor phasing issues.
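To get a feel for where the cancelation lands, here is a rough sketch. It assumes the simplest case: two equal-level copies of the same signal summed to mono, one delayed by a fixed time. Under that assumption the nulls fall at odd multiples of 1/(2 × delay). The `correlation` function is a simplified, whole-signal stand-in for what a correlation meter displays; both function names are my own.

```python
import math


def comb_notch_frequencies_hz(delay_ms, max_hz=20_000.0):
    """Null frequencies when a signal is summed with an equal-level delayed copy."""
    if delay_ms <= 0:
        raise ValueError("delay_ms must be positive")
    notches = []
    k = 0
    while True:
        # The delayed copy is half a cycle late (fully out of phase) at these frequencies
        freq = (2 * k + 1) * 1000.0 / (2.0 * delay_ms)
        if freq > max_hz:
            break
        notches.append(freq)
        k += 1
    return notches


def correlation(left, right):
    """Normalized correlation: +1 for identical channels, -1 for inverted polarity."""
    num = sum(l * r for l, r in zip(left, right))
    den = math.sqrt(sum(l * l for l in left) * sum(r * r for r in right))
    return num / den if den else 0.0


print(comb_notch_frequencies_hz(1.0, max_hz=3_000.0))  # [500.0, 1500.0, 2500.0]
print(comb_notch_frequencies_hz(10.0, max_hz=300.0))   # [50.0, 150.0, 250.0]
```

Note how a 10 ms delay puts the first null at 50 Hz with further nulls every 100 Hz, which is why the low end of a Haas-widened track can thin out when the mix is collapsed to mono.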

Ambience Extraction

Another Haas-related process is referred to as Ambience Extraction, a technique that employs the Haas effect in multichannel setups to isolate the ambience in a stereo recording by decorrelating ambient components sufficiently so they cannot be localized, leaving the foreground components positioned in the front. (Madsen) Of course, with true multichannel formats like surround sound and Dolby Atmos, this sort of simulation is not necessary. Madsen's article on the process of Ambience Extraction is available to members in the AES E-Library.

CONCLUSIONS

The Haas effect is a fascinating psychoacoustic phenomenon that is well worth experimenting with if you haven’t done so already. All psychoacoustic effects are byproducts of our sensory limitations and how we interact with our physical environment. But limitations can be a springboard for innovation and inspire creative thinking.

As Igor Stravinsky said on the subject of limitations: “…my freedom will be so much the greater and more meaningful the more narrowly I limit my field of action and the more I surround myself with obstacles. Whatever diminishes constraint, diminishes strength. The more constraints one imposes, the more one frees one’s self of the chains that shackle the spirit.” (source)

For more about psychoacoustics and ways to exploit our sensory limitations, check out the great sources below.

REFERENCES

3031, Students of PSY, and Edited by Dr. Cheryl Olman. “Head-Related Transfer Function.” Introduction to Sensation and Perception, University of Minnesota Libraries Publishing, 1 Jan. 2022, pressbooks.umn.edu/sensationandperception/chapter/head-related-transfer-function/.

Ballou, Glen. Handbook for Sound Engineers. Focal Press, 2015.

Best, Virginia, and Jayaganesh Swaminathan. “Revisiting the Detection of Interaural Time Differences in Listeners with Hearing Loss.” The Journal of the Acoustical Society of America, U.S. National Library of Medicine, June 2019, www.ncbi.nlm.nih.gov/pmc/articles/PMC6561774/.

Cipriani, Alessandro, and Maurizio Giri. Electronic Music and Sound Design, Vol. 3: Theory and Practice with Max 8. ConTempoNet, 2023.

Everest, F. Alton, and Ken C. Pohlmann. Master Handbook of Acoustics. McGraw-Hill, 2009.

Gardner, Mark B. “Historical background of the Haas and/or precedence effect.” The Journal of the Acoustical Society of America, vol. 43, no. 6, 1 June 1968, pp. 1243–1248, https://doi.org/10.1121/1.1910974.

“Head-Related Transfer Function.” Wikipedia, Wikimedia Foundation, 2 Nov. 2023, en.wikipedia.org/wiki/Head-related_transfer_function.

Howard, David M. Acoustics and Psychoacoustics. Routledge, 2017.

Learning, Lumen. “Physics.” Hearing | Physics, courses.lumenlearning.com/suny-physics/chapter/17-6-hearing/. Accessed 31 Jan. 2024.

Madsen, E. Roerbaek. “Extraction of Ambiance Information from Ordinary Recordings.” Journal of the Audio Engineering Society, Audio Engineering Society, 1 Oct. 1970, www.aes.org/e-lib/browse.cfm?elib=1481.

Stewart, David. “Timing Is Everything: Time-Aligning Speakers for Your PA.” inSync, 5 Dec. 2019, www.sweetwater.com/insync/timing-is-everything-time-aligning-supplemental-speakers-for-your-pa/.

Thavam, Sinthiya, and Mathias Dietz. “Smallest Perceivable Interaural Time Differences.” The Journal of the Acoustical Society of America, U.S. National Library of Medicine, pubmed.ncbi.nlm.nih.gov/30710981/. Accessed 31 Jan. 2024.

Thompson, Daniel M. Understanding Audio: Getting the Most out of Your Project or Professional Recording Studio. Berklee Press, 2018.

Wallach, Hans, et al. “The precedence effect in sound localization.” The American Journal of Psychology, vol. 62, no. 3, July 1949, p. 315, https://doi.org/10.2307/1418275.

“What Is ‘Haas Effect’?” DPA, www.dpamicrophones.com/mic-dictionary/haas-effect. Accessed 31 Jan. 2024.