In this article, I’m going to break down how I used Udio AI during the songwriting phase of a production, which I then recorded, mixed, and mastered on my own.

AI-generated music has been a topic of heated discussion this year. We are now at the point where text-to-music can render very impressive results. There are, of course, ethical concerns about using technology that is built on thousands of hours of others’ art, but I’m going to save that for another article.

The Basics of Udio AI for Music Production

I discovered Udio AI, a text-to-music website, earlier this year, and was immediately impressed with its ability to generate musical ideas in virtually any genre. You can even use it to create soundscapes or spoken-word content. While it can generate lyrics from a prompt, what fascinated me was that I could input my own lyrics, suggest a genre and instrumentation, and have Udio generate outputs with vocals that were of good (or at least interesting) musical quality. The fidelity still leaves a lot to be desired: it often sounds like a very low-quality MP3 or similarly compressed file. However, considering that Udio is free to use (there is a paid plan with additional features like stem separation, which I’ve opted into), one can’t really complain.

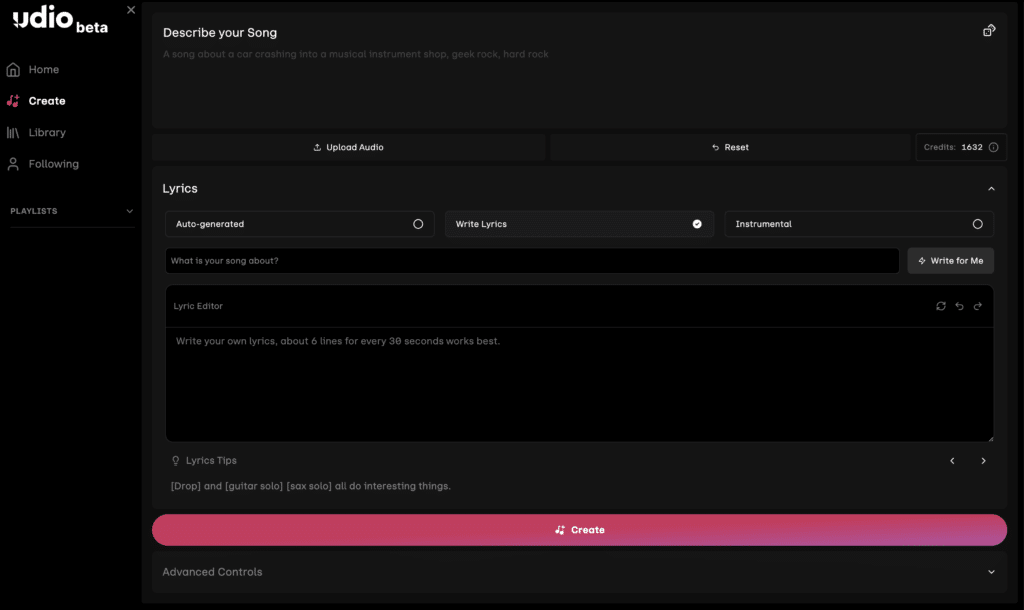

Udio’s Simple User Interface

By default, Udio outputs two 32-second generations, which can be extended by adding sections before and after, as well as intros and outros. You can determine song structure by using bracketed tags such as [verse], [chorus], and [solo].

It’s very rare for Udio to immediately output something that I find usable, but if I hit Create three times, for six generations in total, usually two or three of the outputs are inspiring or interesting enough to keep pursuing.

Udio’s Advanced Features, which allow users to fine-tune their generations.

After playing around with Udio for weeks, mostly to generate funny songs that would make my friends laugh, I felt inspired to write another humorous song about a “weird old man”, in which the main character fantasizes about how an insufferable old man might meet his demise. The verses would serve as backstory about the weird old man, and the choruses would provide specific details about his fate. Using my own lyrics, I generated the first verse and chorus several times with similar prompts. Below you can read the lyrics and hear how the prompts affected the initial outputs, which are, admittedly, not very good:

[verse]

You’re just a weird old man.

Look at these freaks who would eat from your hand.

Bankrupt and swollen.

Even trash can be Golden.

You’re just a weird old man.

[chorus]

Maybe you’ll stroke out.

Or fall down the stairs.

You’d be dead by now.

If life were only fair.

Lofi rock, underground, guitar, jangle rock:

Jangle pop, ethereal, lofi pop:

These were not great, but something really struck me when I created a generation using the prompt “Lofi pop, jangle, two part harmonies”. Have a listen to what Udio titled “Oddities of Life”:

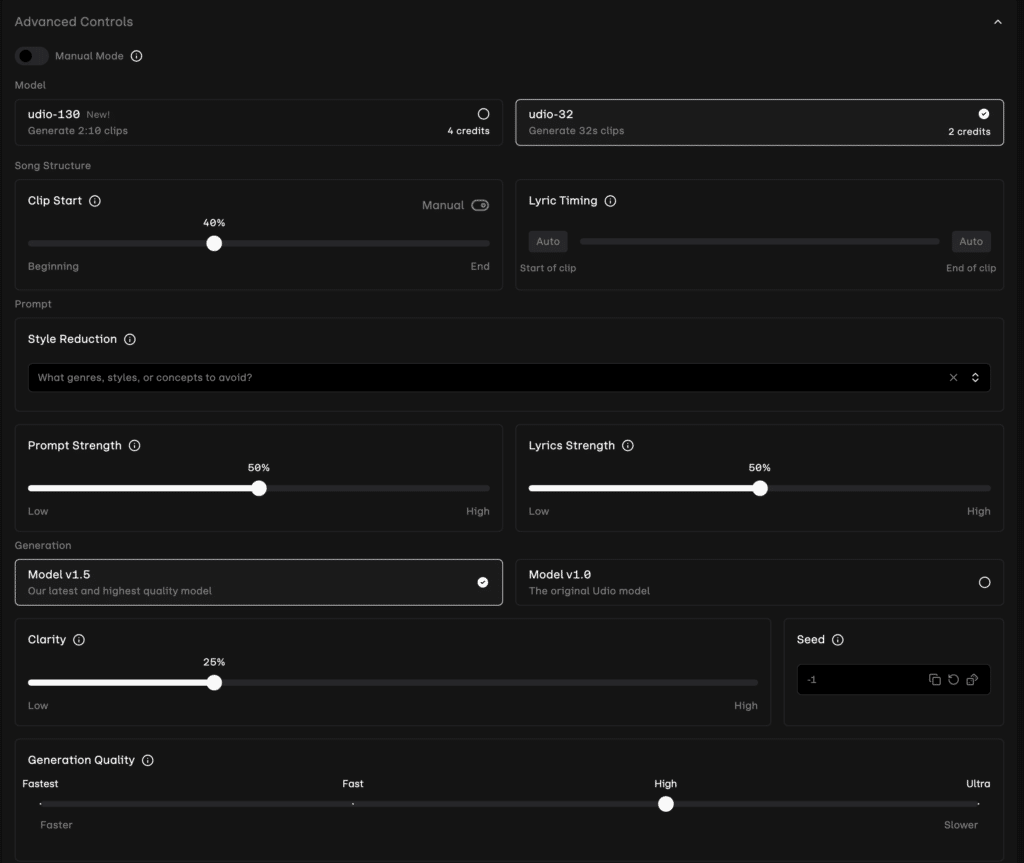

From there, I sought to extend the song to include additional verses and choruses, a bridge, and a guitar solo. Without getting too far into the weeds, it took a fair number of generations to get where I wanted. Perhaps an additional chorus didn’t sound enough like the first chorus, or maybe the bridge wasn’t quite right. Regardless, I kept generating extensions and variations (20, to be exact) until it was to my liking at Version 2.2.2.1, which I renamed “Weird Old Man”.

I quite like the character of this song! It tells the story that I wanted to tell, but differently than if I were to write the chords and melody myself. During this process, Udio AI felt like a collaborator, not a substitute for my own effort and musical talent.

Some of the many extensions and remixes that went into the production that ultimately became “Weird Old Man”.

MAKING THE SONG MY OWN

Inspired, I decided to see the production through and do a “cover” of sorts. I started by downloading the stems of the final generation and loading them into Ableton Live. To my dismay, the song wasn’t at a set BPM. It would speed up and slow down randomly and somewhat drastically, and while I enjoyed the organic musical feel that this created, it would’ve been impossible to build an entire production on it. Using a combination of the Warp function in Live and editing the clips so that transients were closer to the grid, I cobbled together a “backing track” at 118.7 BPM.
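To give a sense of the arithmetic behind lining transients up with a grid, here is a minimal Python sketch. It is not how Live’s Warp function works internally, and the function names and the sample onset times are hypothetical. It only shows how a fixed tempo of 118.7 BPM translates into grid positions, how much tempo drift a set of uneven beat onsets implies, and how each onset gets pulled to its nearest grid line.

```python
from typing import List

TARGET_BPM = 118.7  # tempo of the backing track described above


def beat_length_seconds(bpm: float) -> float:
    """Duration of one beat (quarter note) in seconds."""
    return 60.0 / bpm


def local_tempos(onsets: List[float]) -> List[float]:
    """Tempo (in BPM) implied by each gap between consecutive beat onsets."""
    return [60.0 / (b - a) for a, b in zip(onsets, onsets[1:])]


def snap_to_grid(onsets: List[float], bpm: float, subdivisions: int = 1) -> List[float]:
    """Move each onset (in seconds) to the nearest grid line at the given tempo.

    subdivisions is the number of grid lines per beat (1 = quarter notes).
    """
    step = beat_length_seconds(bpm) / subdivisions
    return [round(t / step) * step for t in onsets]


if __name__ == "__main__":
    # Hypothetical beat onsets (seconds) from a stem whose tempo wanders.
    drifting = [0.00, 0.52, 1.00, 1.53, 2.01]
    print("implied tempos:", [round(t, 1) for t in local_tempos(drifting)])
    print("snapped onsets:", [round(t, 3) for t in snap_to_grid(drifting, TARGET_BPM)])
```

In practice a DAW stretches the audio between markers rather than just moving timestamps, so treat this as an illustration of the grid math only.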

I used this backing track as the foundation for the rest of the song. Here’s how I handled the different production elements.

DRUMS

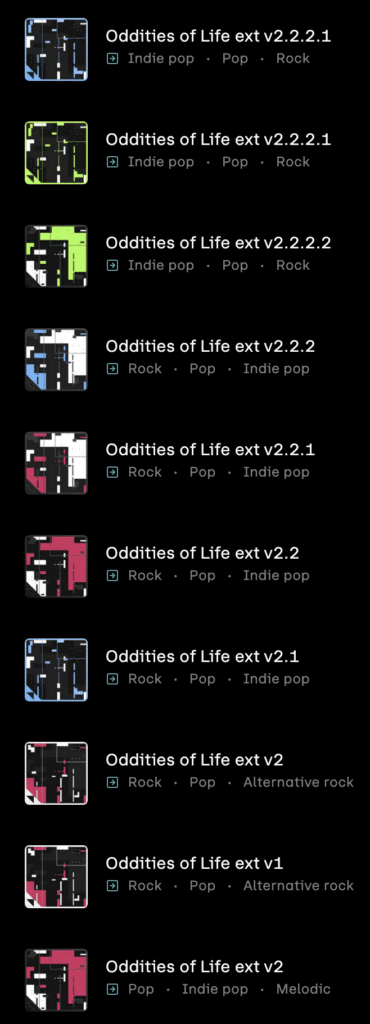

I laid down a MIDI drum performance with the intention of sending it to my friend and collaborator John O’ Reilly Jr. of Boom Crash Drum Tracks. John is an incredibly talented session drummer and engineer, and he provided a full drum multitrack that included 3 excellent takes, which I comped together in Pro Tools. The mics include kick, snare, toms, overheads, rooms, and more. You can see the tracks below, as well as hear the comparison of my MIDI mockup (Version A) next to John’s final comp (Version B).

The many layers of Drums provided by John O’ Reilly Jr.

GUITARS AND SYNTHS

“Weird Old Man” is a decidedly guitar-driven song, which is great because that’s my primary instrument. Figuring out how to play the song exactly as it was generated was nearly impossible because Udio doesn’t really generate based on how music is played. I actually quite like the original guitars. They almost remind me of someone using a cell phone to try to record a performance. There are dropouts, and there is a bizarre warble that I missed in mine. Ultimately, I decided to keep a bit of the original guitar and synth track blended in with mine. Have a listen to how the original output (Version A) compares to mine (Version B) and to the two together (Version C). I should note that the original stem from Udio had the Mellotron-style synth embedded, so you can also hear the Mellotron layers I created using VSTs from Arturia:

For the choruses, I wanted to embellish the original arrangement by adding guitars with tremolo, reverb, and delay, creating a lush and almost psychedelic feel.

The guitars in the original bridge were played cleanly (Version A), but I decided to stray from that and use a crunchy guitar tone (Version B).

I asked Udio to create a guitar solo, but none of its attempts resonated with me, so I wrote one on my own and recorded it on my Reverend Double Agent W with an absurdly fuzzed-out guitar tone achieved using my ZVEX Fuzz Factory through a Fender Princeton amplifier.

Playing my Reverend Double Agent W. Reverend makes fine instruments at an incredible value.

BASS

I loved the groove of the original bass performance (Version A), but it lacked low end and clarity and had warbly artifacts, so I replicated it pretty closely using my beloved Hofner Bass (Version B).

VOCALS

Some of the vocal tracks within the session for Weird Old Man, which are comped together and processed in a variety of ways, including “Reamping”.

I’m not a virtuoso singer by any means, but the original generation was in a range I could handle, and the almost “talky” comical delivery style was doable too. Here is a comparison of the original (Version A) and mine (Version B).

I recorded the vocals using my AKG C414 while also holding an SM57, which was heavily processed on the way in. I cobbled together a final take from 17 different takes, many of which were me attempting different sections of the song.

The original also had an “octave up” part which was nearly out of my range, but the pitchy delivery was part of the charm. I used VocAlign to get the octave-up performance closer to the lead.

I asked my friend Jason Cummings to lay down some layers in the final chorus, just to add some ear candy to the arrangement.

MIX AND MASTER

The mastering chain on Weird Old Man, which includes tools by Newfangled Audio, FabFilter, Sonnox, Tone Projects, and Plugin Alliance.

I applied all the same techniques to the song that I would for any other mix, using compression, EQ, saturation, reverb, delay, and more to capture the sonics and feeling I was after. I added a “glitched out” section directly after the guitar solo by sending elements including my lead vocal out of Pro Tools and through my Chase Bliss MOOD pedal, piecing together moments that I found compelling. Have a listen to the raw recordings:

You can hear how it all came together here:

The entire mix was run through outboard equipment including my Overstayer MAS for harmonic saturation and my High Voltage Audio EQ6 for minor EQ adjustments. I applied plugins as needed to achieve the dynamics, tonality, and overall loudness I was after.

You can hear the final version, which is available on all major streaming services including Apple Music and Spotify.

The Album Art for the “Weird Old Man” single

SUMMARY OF USING UDIO AI FOR MUSIC PRODUCTION

I understand the hesitancy to use Udio, or any other AI-based tool for creation, and I certainly would never release anything that was made strictly using a tool like this. I will continue to practice more traditional methods of songwriting (I usually write on guitar or piano while singing) and encourage artists not to use AI tools as a complete substitute for the creative process. There is no substitute for human expression and performance.

That said, producers of music have been using various types of technology to either speed up or enhance what is humanly possible since the dawn of recorded sound. I don’t view this as much different than using loops from a service like Splice, quantizing a performance to be perfect to a grid, or tuning a vocal to be more in pitch. It’s a tool made to be used, and I see no issue as long as one doesn’t over-rely on it.

After the ideation phase, which I won’t deny was quite reliant on Udio, I put in dozens of hours recording, performing, mixing, and mastering the song. Text-to-music, specifically with Udio, will definitely show up in my bag of production tricks, and I’m fascinated to hear how the software improves as more competitors pop up and technology advances.